In this article, we will highlight a number of spoofing and phishing strategies that can be employed by external attackers to target an organization using Teams in the initial access phase of the kill chain.


We previously wrote two articles about phishing via Slack, the first for the initial access kill chain phase and the second for lateral movement and persistence. For those interested, the links are below:

Some readers asked what this looks like for Microsoft Teams and so we decided to write this article to show what similar attacks look like via Teams.

We’ll primarily be using the following SaaS attack techniques chained together:

Why focus on instant messengers?

If you’ve read either of the previous articles on Slack, you can skip this introductory piece and jump straight to the next section.

Instant messengers (IM) aren’t new. However, IM apps were originally focused on internal communication, whereas phishing and social engineering attacks usually come from outside. Email remained the standards-based protocol that enabled external communication no matter which email vendor was in use. In recent years, however, instant messengers have become the primary method of communication for many businesses. I wanted to focus on IM here because if that’s where employees are communicating, it’s the best place to launch attacks against them. Even better for an attacker, users have historically placed a higher degree of trust in IM platforms than in email, so IM becomes a potentially easy target.

While IM platforms were initially used solely for internal communications, organizations quickly realized that IM platforms could be used to communicate with external groups, individuals, freelancers, and contractors, with the hope of fewer emails and more instant communications.

We now have Slack Connect and Microsoft Teams external access to support this, with Slack Connect introduced in June 2020 and Teams introducing it in January 2022. This external access has increased the attack surface of these platforms considerably.

Despite decades of security research, email security appliances and user security training, email-based phishing and social engineering are still commonly successful. Now we have instant messenger platforms that:

Offer richer functionality than email,

Lack the centralized security gateways and other security controls common to email, and

Are unfamiliar as a threat vector to the average user compared with email.

There’s also a sense of urgency associated with IM messages due to the conversational nature compared with emails. Combined with a history of increased trust, we have the ingredients for increased social engineering success.

There’s been an uptick recently in IM-based phishing research and real-world attacks, particularly for Microsoft Teams. For example, check out the great research from JumpSec on bypassing attachment protection for external Teams messages, the offensive tool TeamsPhisher and attacks distributing DarkGate malware via Teams.

IM user spoofing

The first consideration is the spoofing aspect. We’ve all seen techniques for spoofing emails, but there are many security controls like Sender Policy Framework (SPF) that can prevent direct spoofing of domains and email security gateways that can flag suspicious domains.

Those security controls don’t exist for IM, so we have new options for spoofing.

External IM invites

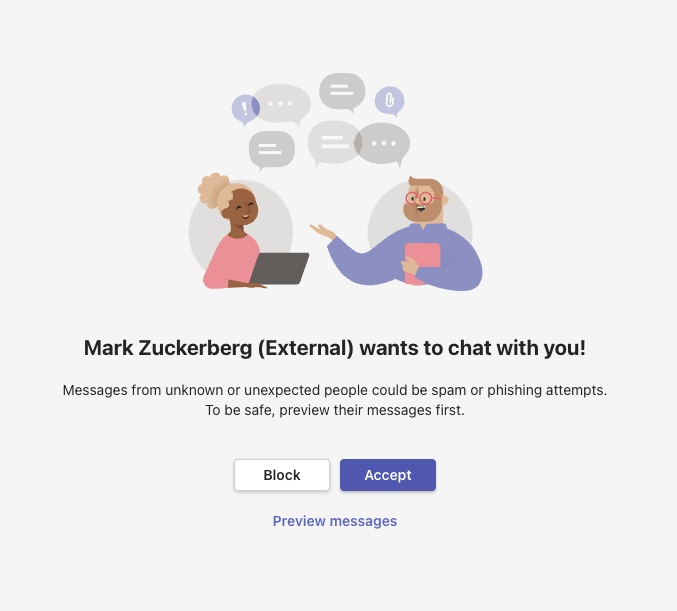

IM applications often use friendly display names for organizations and employees, as well as user-chosen handles, and these often don’t need to be unique. One interesting aspect of Microsoft Teams is that this behavior differs depending on whether the external message request comes from a Teams organization or from an individual Microsoft account user using Teams.

External invite from individual Microsoft account

When messaging from an individual Microsoft account, we can choose the name to represent ourselves but we can’t choose an organization name.

This is a mixed result: we can’t spoof a legitimate organization name, but the invite doesn’t show the real email address of the attacker’s account and simply displays “External” as an indicator. Additionally, when messages are received from the external user, the sender’s profile photo is not shown, so we can’t spoof a known profile photo either.

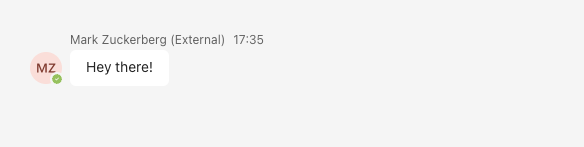

External invite from Teams organization

On the other hand, if we initiate an external connection request from a Teams organization then we can control our organization name but this is not of use to us in this case. This is because the connection request actually shows the email address of the user account. Therefore, we need to register a convincing email domain and we are relegated back to something much closer to standard email social engineering techniques.

In this case, it seems better to use an individual Microsoft account with Teams to spoof external invites, as it’s not easy for a target user to tell whether the user or organization requesting to connect is legitimate when they first receive this invitation.

Whatever method is used, there’s also a curiosity incentive - you can’t see a first message from the user, so it’s tempting for the target user to accept in order to see the message, even if they then ignore it. This is one case where Teams actually provides an interesting defensive ability - it’s possible for the user to preview the message that has been sent without formally accepting the invitation first.

Whilst the initial invite spoofing options with Teams are not ideal from an attacker’s perspective (Slack certainly provides more interesting spoofing capabilities) there are certainly options to experiment with and it still allows for some capabilities not possible with email spoofing, such as hiding the email address and showing a display name only.

However, all an attacker needs to do is get a first connection and they have cleared the first hurdle. They can now launch attacks either immediately or in future. The conversational nature of IM apps makes it much easier to ramp up the conversation gradually towards an actual attack using a malicious link or attachment that is more likely to succeed.

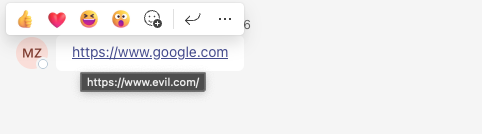

Link preview spoofing

Another key issue is link preview spoofing. HTML allows a variety of ways to specify hyperlinks. In email, secure email gateways will often alert or block commonly abused types, such as forging a different URL as the link display text to what the underlying link points to. For example, an attacker could show the link as https://www.google.com but direct it to https://www.evil.com when it is clicked. Secure email gateways often perform a lot of other analysis of links, including domain analysis and active crawling to identify common phishing attacks.
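To make the forged-link pattern concrete, here is a minimal sketch of the kind of check a gateway might apply: it flags anchors whose visible text is itself a URL on a different host from the one the `href` points to. The class and function names are our own illustrative choices, not part of any product, and the check only catches display text that includes a scheme such as `https://`.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkCollector(HTMLParser):
    """Collect (href, visible text) pairs for every <a> element."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only record text while we are inside an <a> that has an href
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


def forged_links(html):
    """Return (display text, href) pairs where the text shows a different host."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    forged = []
    for href, text in collector.links:
        shown = urlparse(text).hostname   # None when the text is not a full URL
        actual = urlparse(href).hostname
        if shown and actual and shown != actual:
            forged.append((text, href))
    return forged


message = '<a href="https://www.evil.com/login">https://www.google.com</a>'
print(forged_links(message))
```

Friendly link text such as "click here" is deliberately ignored by this sketch, since it makes no claim about the destination.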

On IM applications, however, this same standard of link analysis is not always present and the widespread introduction of link unfurling/previewing has also given additional options for spoofing links to hide their true source and increase social engineering success.

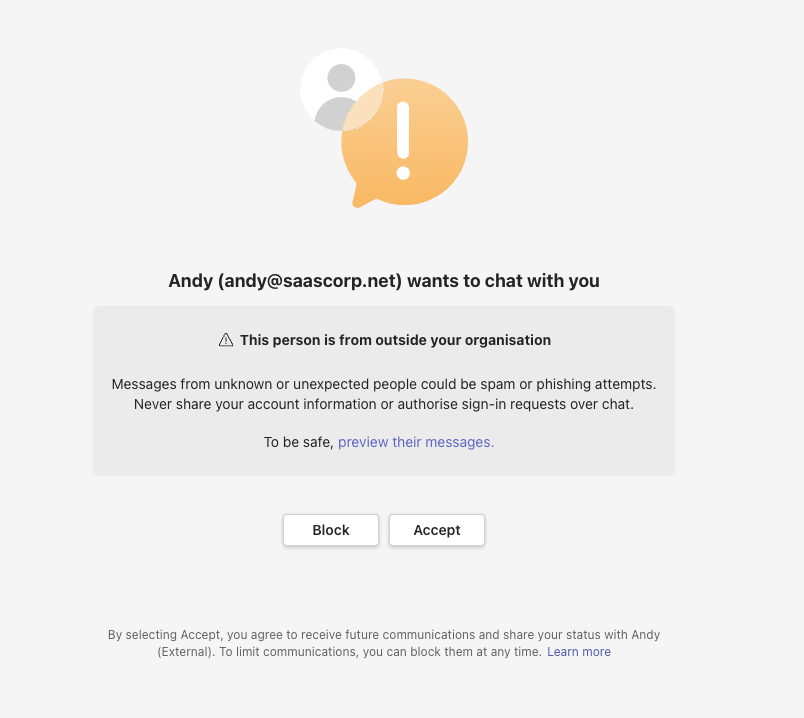

Traditional link forging

We’ll start with a common traditional link forging scenario to see how Teams handles that, then show how link previews change the threat.

Here, we can see forging a link is permitted by Teams. A hover-over for a few seconds will show the real URL, but there is nothing stopping an attacker forging fake links if the user just clicks them without checking. This is something commonly prevented by secure email gateways and is something that generates an explicit warning when performed using Slack.

We can of course use friendly text to construct a link to our malicious domain too, something often used in email-based phishing. However, it still shows the real URL on hover-over and so it’s arguably of less use in Teams when we can straight up forge fake links. A user is much less likely to check the hover-over if they think they’ve already seen the real URL as in the case of the forged link shown previously.

Abusing link previews

It gets more interesting when we use links that Teams is able to unfurl to provide a link preview. Here we’ll show a legitimate example of posting one of our own blogs where Teams helpfully unfurls the URL and gives some context to the link as a preview:

This is very useful for the user and, although the domain is still visible as part of the preview, the rest of the preview dominates the display and gives a sense of legitimacy. The user can also hover over the link to see the full URL, but they have much less reason to do that once they have seen the link preview, particularly if they have also noticed the domain displayed in it.

So, how can we use this scenario maliciously?

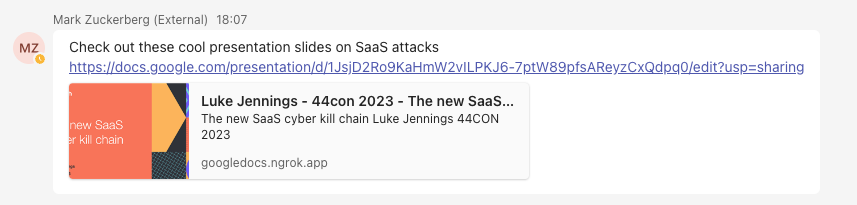

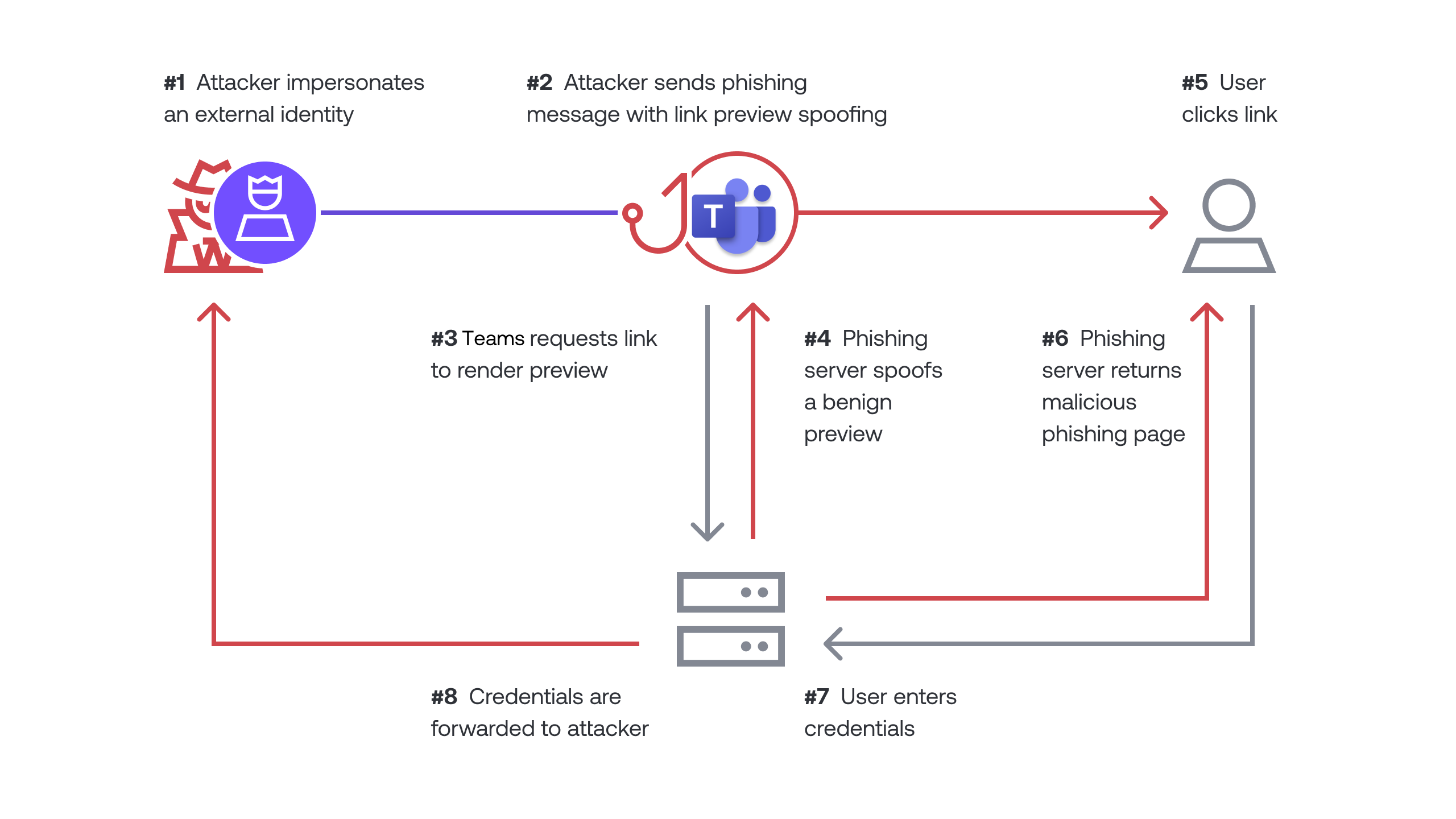

The obvious attack scenario is to forge a different link preview for Teams than what is given to the user when they click the link. Then when the user clicks the link, they’ll be directed to our phishing page instead.

We can do this by performing user agent specific processing of web requests. For example, Teams unfurling uses a user agent like the following:

User-Agent: Mozilla/5.0 (Windows NT 6.1; WOW64) SkypeUriPreview Preview/0.5 skype-url-preview@microsoft.com

Therefore, without even requiring much sophistication, we can use some simple Python code to perform a redirect to a legitimate source when our web request handler sees this user agent. However, when a target user visits using a normal web browser we instead return a malicious page. The example Python code below redirects to benign content for a Teams preview, while serving malicious content otherwise:

    from http.server import HTTPServer, SimpleHTTPRequestHandler

    # Benign page that link-preview bots are redirected to
    BENIGN_URL = 'https://docs.google.com/presentation/d/1JsjD2Ro9KaHmW2vILPKJ6-7ptW89pfsAReyzCxQdpq0/edit?usp=sharing'

    class MyHandler(SimpleHTTPRequestHandler):
        def do_GET(self):
            # Header lookup is case-insensitive; fall back to '' if it is absent
            val = self.headers.get("User-Agent", "")
            print("User-Agent", val)
            # Link-preview bots (Slack, Teams, Google) get the benign redirect
            if val.startswith("Slackbot-LinkExpanding") or "SkypeUriPreview" in val or "Google-PageRenderer" in val:
                self.send_response(301)
                self.send_header('Location', BENIGN_URL)
                self.end_headers()
                return
            # Any other client (e.g. the target's browser) is served files from
            # the current directory, which is where the phishing page would live
            return super().do_GET()

    httpd = HTTPServer(('localhost', 8000), MyHandler)
    httpd.serve_forever()
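From the defender's side, the same trick can be probed by requesting a suspicious URL twice, once with the preview user agent and once with a browser-like one, and comparing what comes back. The following is a rough sketch under a few assumptions: `BROWSER_UA` is just an example browser string, the function names are ours, and a difference between the two responses is only a hint worth investigating, since legitimate sites sometimes vary content by user agent too.

```python
import urllib.error
import urllib.request

# Preview user agent as quoted in this article; the browser string is an assumed example
PREVIEW_UA = ("Mozilla/5.0 (Windows NT 6.1; WOW64) SkypeUriPreview "
              "Preview/0.5 skype-url-preview@microsoft.com")
BROWSER_UA = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/120.0 Safari/537.36")


class _NoRedirect(urllib.request.HTTPRedirectHandler):
    def redirect_request(self, req, fp, code, msg, headers, newurl):
        return None  # do not follow; the 3xx then surfaces as an HTTPError


def fetch(url, user_agent, timeout=10):
    """Return (status code, Location header) without following redirects."""
    opener = urllib.request.build_opener(_NoRedirect)
    req = urllib.request.Request(url, headers={"User-Agent": user_agent})
    try:
        with opener.open(req, timeout=timeout) as resp:
            return resp.status, resp.headers.get("Location")
    except urllib.error.HTTPError as err:
        return err.code, err.headers.get("Location")


def looks_cloaked(url, fetcher=fetch):
    """True when the preview bot and a browser receive different responses."""
    return fetcher(url, PREVIEW_UA) != fetcher(url, BROWSER_UA)
```

Against the handler above running locally, `looks_cloaked("http://localhost:8000/")` would be expected to report a difference, because only the preview user agent receives the 301 redirect. The `fetcher` parameter exists so the comparison logic can be exercised without network access.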

If you’ve read our previous Slack article, you’ll recall that we also minimized the link text to a period so as to reduce the chances of the user performing a hover-over to see the real URL, whereas the link preview itself is much larger and clickable.

The problem with Teams is that the domain portion of the link shows as part of the link preview as we saw above, which isn’t ideal as an attacker. Obviously, in a real attack we would register as convincing a domain as we could but we’d still rather the user either does not see it or sees a genuinely legitimate domain instead.

However, we also saw earlier that, unlike Slack, Teams allows full link forging without a warning. Hyperlinks are highlighted in blue and are much more prominent than the domain text in the preview, so our best strategy is to present a forged legitimate URL that draws the user’s attention, pair it with a forged link preview, and so distract the user from the faded real domain shown below.

The end result of this is that the user sees both a legitimate URL and a nice friendly link preview legitimately produced by Teams and Google Docs in real time, whereas if they click the link they’ll be taken to our phishing page instead.

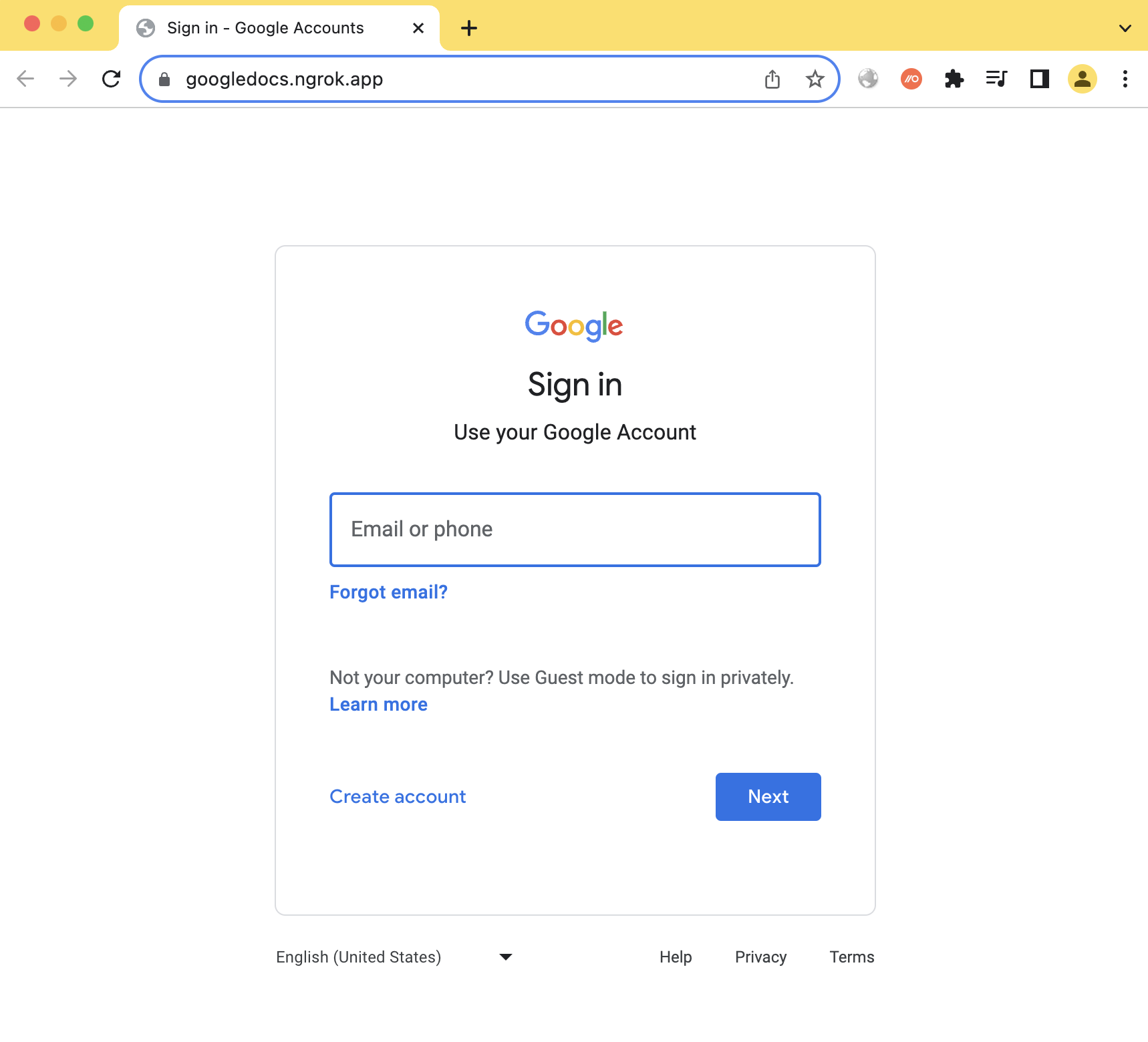

In this case, we have shown a Google style phishing page as an example for harvesting credentials. Hopefully, the user will assume their Google Docs session expired and then re-enter their credentials. See what the target user would see below:

As we can see, the phishing message generated in this case is pretty convincing. It shows a legitimate link to Google Docs that is highlighted and a legitimate link preview too. The faded ngrok domain in the link preview is very easy to miss. However, clicking the link will take the user to our phishing page.

The diagram below shows how this attack works from a data flow perspective:

Cleaning your tracks

Ok, so let’s say an attacker has either successfully phished the target user or perhaps now the user is suspicious and likely contacting security or IT. One of the great benefits of IM apps is you can generally edit and delete messages, which can be abused by an attacker.

As an attacker, I could make a tiny change to my message to replace the malicious link with the legitimate link I was spoofing for the link preview if I got the sense the target was getting suspicious. Then, if an incident responder comes to investigate, the malicious link is now gone and the message itself appears almost identical, covering my tracks. Other than being able to see the message has been edited, it’s no longer easy to see this was a phishing attack or where the phishing link pointed to.

This is definitely a useful capability that isn’t usually possible with email phishing! See this minor change reflected below, making the original phishing message appear innocuous due to the replacement of the phishing URL with a legitimate URL. A careful observer will notice that the message appears almost identical to the original, only now the faded domain in the link preview shows docs.google.com, instead of our malicious domain, since the link has been edited.
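For an incident responder, the useful question is which URLs changed between versions of a message. The sketch below assumes the responder has somehow retained both the original and the edited message body as strings (for example from logs or an export); how those are obtained is outside its scope, and the function name and the `attacker.example` domain are hypothetical.

```python
import re

# Rough URL matcher: stops at whitespace, quotes and angle brackets
URL_RE = re.compile(r"https?://[^\s\"'<>]+")


def url_changes(original_body, edited_body):
    """Report URLs that disappeared from, or were added to, an edited message."""
    before = set(URL_RE.findall(original_body))
    after = set(URL_RE.findall(edited_body))
    return {"removed": sorted(before - after), "added": sorted(after - before)}


original = ('<a href="https://attacker.example/doc">'
            'https://docs.google.com/presentation/d/1</a>')
edited = ('<a href="https://docs.google.com/presentation/d/1">'
          'https://docs.google.com/presentation/d/1</a>')
print(url_changes(original, edited))
```

A URL that appears only in the earlier version, as with the hypothetical attacker domain here, is the trace that the edit was meant to erase.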

Impact

We’ve covered a lot of ground here, showing the chaining of external user spoofing attacks with link preview spoofing and also how to cover your tracks afterwards. It’s worth taking a step back and considering the key impact points:

IM apps like Teams are now external phishing and social engineering vectors, not just internal ones

User spoofing can be used in novel ways to enhance social engineering that employees may not be familiar with

Link spoofing techniques can make phishing links much harder to spot and so increase social engineering success

Malicious Teams messages can be modified later to replace the phishing link to cover up the attack

Conclusion

IM apps have become the default internal communication channel for most organizations, and they are now a common method of communication with external parties as well. This means they’ll become a key battleground in both the initial access phase of compromises and the later phases of lateral movement and persistence.

This also means organizations reliant on traditional email security gateways and email-based phishing training are likely to see the effectiveness of these controls decrease if attacks shift to the IM apps.

In this article, we highlighted a number of spoofing and phishing strategies that can be employed by external attackers to target an organization using Teams in the initial access phase of the kill chain.