Ingesting events using Azure Monitor and Microsoft Sentinel

Configure Azure Monitor and Microsoft Sentinel to ingest Push webhook logs. You can then use Microsoft Sentinel to run analytics and configure alerting.

Overview of setup steps:

1. Create a Log Analytics workspace in Sentinel if you haven’t already.

2. Retrieve the relevant keys from the Log Analytics workspace.

3. Complete the integration in the Push admin console.

Once the integration is complete, a log table will automatically be created within Sentinel.

Important! Do not create a custom log table, as this will cause the integration to fail.

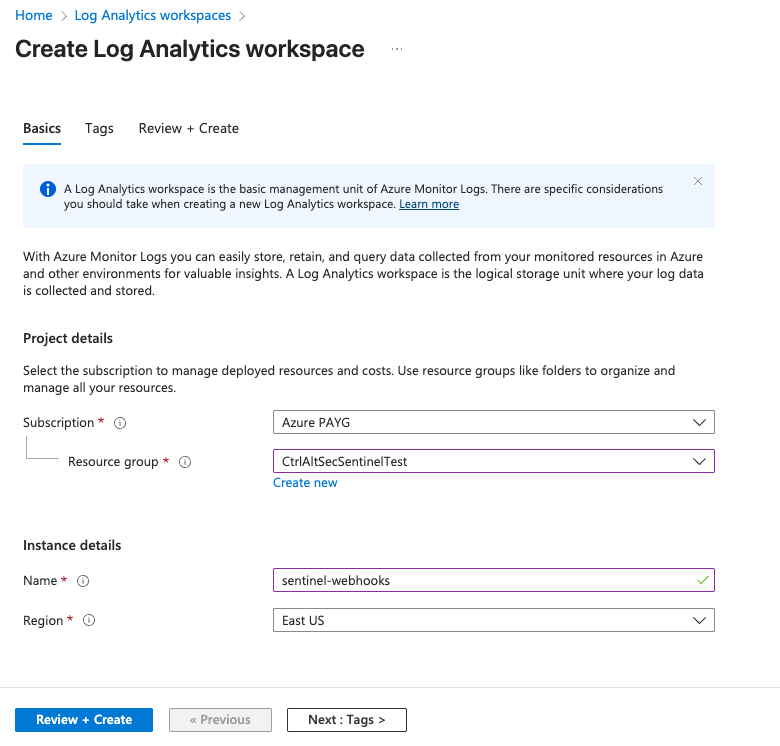

Create a Log Analytics workspace

First, check whether you already have a Log Analytics workspace configured within Sentinel. If you do, skip steps 1-8 below and go straight to the section titled Retrieve keys from Log Analytics workspace.

If you don't have an existing workspace, you'll need to create one. Here's how.

1. Navigate to Log Analytics workspaces and click +Create.

2. Select or create a Resource group with the appropriate permissions.

3. Click Review + Create.

4. Click Create.

5. Wait for the deployment to complete.

6. Next, add the Log Analytics workspace to Sentinel: go to Sentinel and select +Create.

7. Choose the Log Analytics workspace you just created.

8. Click Add.

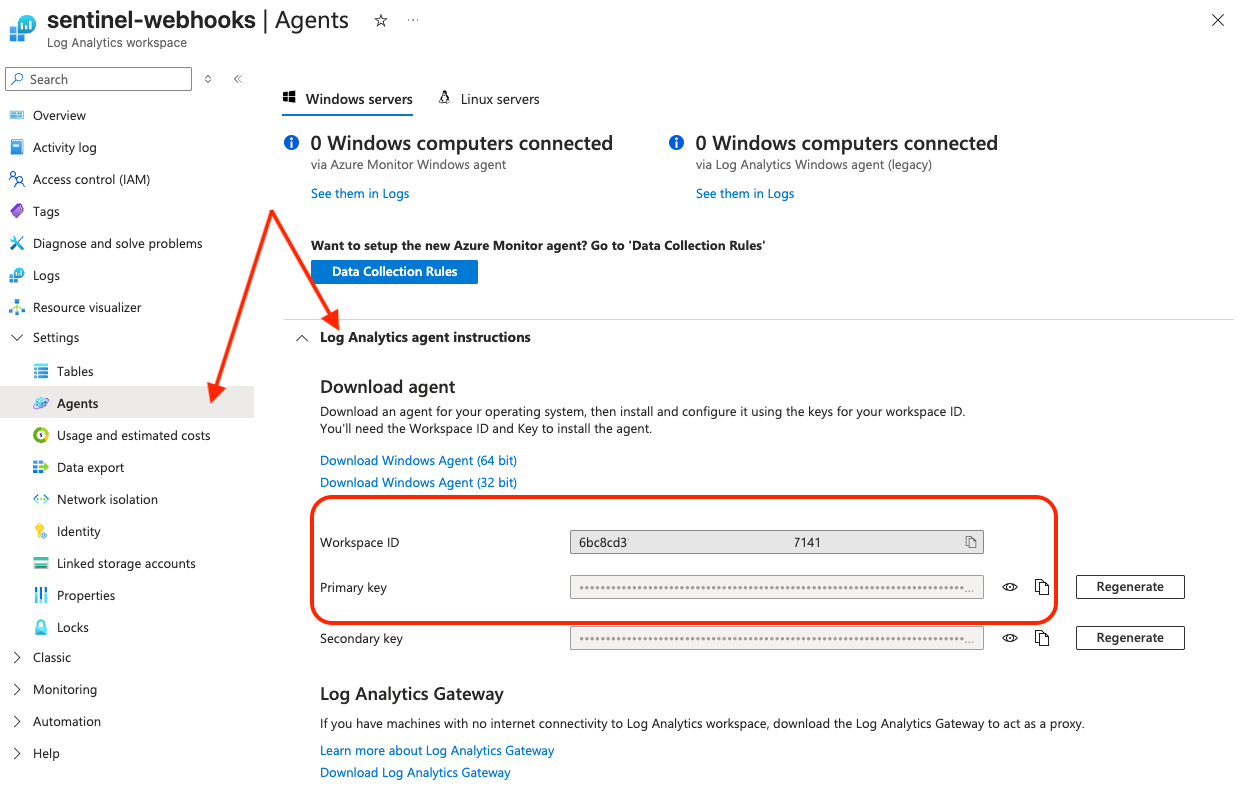

Retrieve keys from Log Analytics workspace

Once the workspace has been created, you're ready to retrieve keys from Azure.

Within Azure, go to Log Analytics workspaces.

1. Select your workspace.

2. Then go to Settings > Agents > Log Analytics agent instructions.

3. Copy the Workspace ID and Primary key.

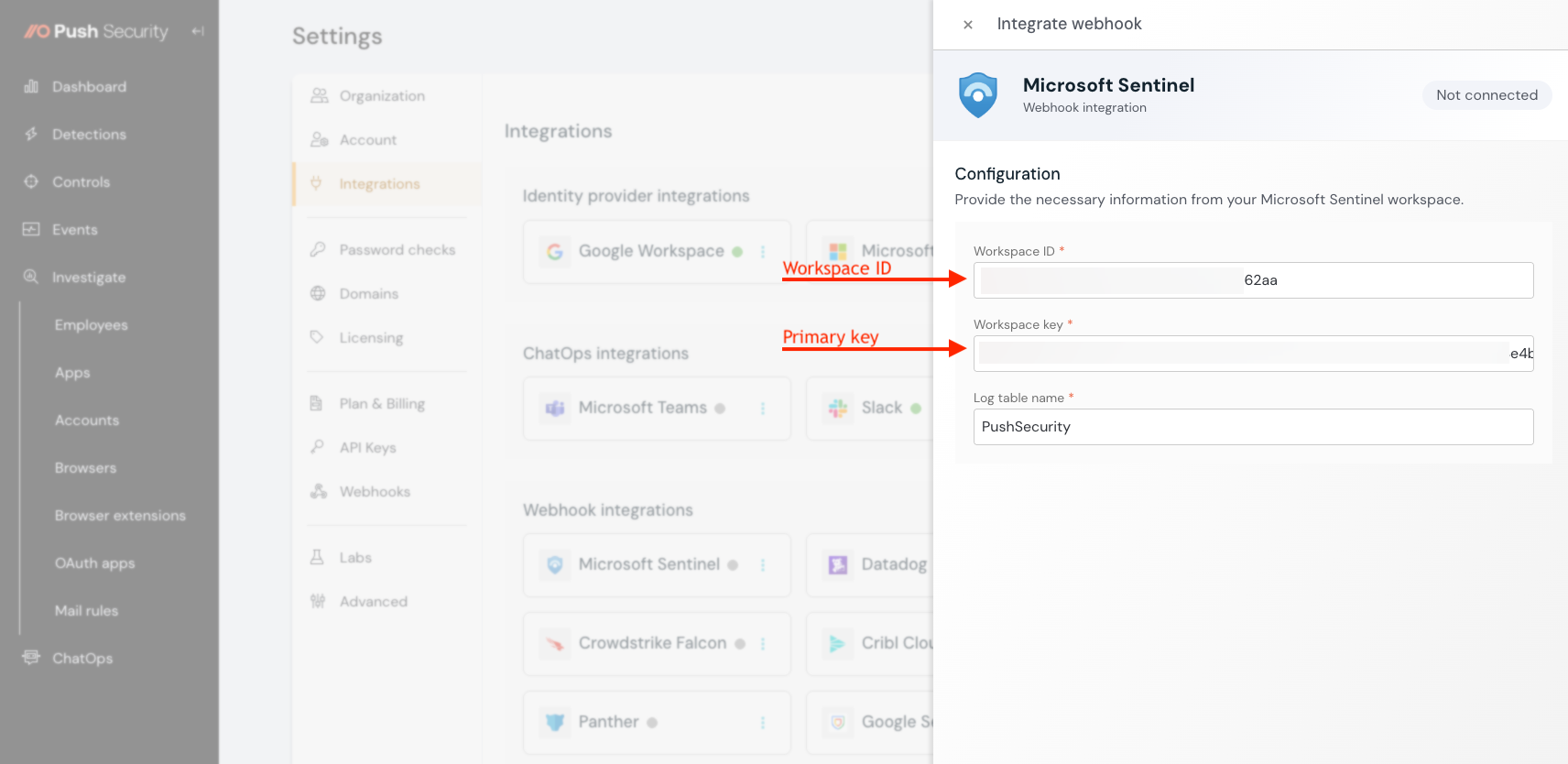
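Before pasting the two values into Push, it can help to confirm they were copied intact. The following is a minimal sketch, assuming the Workspace ID is a GUID and the Primary key is a base64-encoded string, which is how Azure normally presents them. The function names are hypothetical and are not part of Push or Azure.

```python
import base64
import binascii
import uuid


def looks_like_workspace_id(value: str) -> bool:
    """Return True if the value is a canonical GUID (assumed Workspace ID format)."""
    candidate = value.strip()
    try:
        parsed = uuid.UUID(candidate)
    except ValueError:
        return False
    # Require the hyphenated 8-4-4-4-12 form rather than any string UUID() accepts.
    return str(parsed) == candidate.lower()


def looks_like_primary_key(value: str) -> bool:
    """Return True if the value is non-empty, valid base64 (assumed key format)."""
    try:
        decoded = base64.b64decode(value.strip(), validate=True)
    except (binascii.Error, ValueError):
        return False
    return len(decoded) > 0
```

A value that fails either check was most likely truncated or picked up extra characters when it was copied.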

Configure the integration in Push

Finally, you need to set up the Microsoft Sentinel integration in the Push admin console. Go to Settings > Integrations and choose Sentinel.

Enter the Workspace ID and Primary key (Workspace key), and enter a new log table name.

Important! Do not use an existing log table name, as this will cause the integration to fail. The table is created automatically from the new name you provide.

The new table will appear in Microsoft Sentinel under General > Logs. Its name will automatically have “_CL” appended (e.g. PushSecurity_CL).
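The naming rules above can be sketched as a small pre-flight check. It derives the table name you should expect to see in Sentinel and rejects a name that would collide with an existing table. The character and length limits are an assumption based on the custom log naming rules of the Azure Monitor HTTP Data Collector API. The function name and the `existing_tables` parameter are hypothetical.

```python
import re

# Assumed rule: letters, digits, and underscores only, at most 100 characters.
_NAME_PATTERN = re.compile(r"[A-Za-z0-9_]{1,100}")


def resulting_table_name(log_table_name: str, existing_tables=()) -> str:
    """Return the table name expected in Sentinel for a given Push log table name."""
    if not _NAME_PATTERN.fullmatch(log_table_name):
        raise ValueError(f"Unsupported log table name: {log_table_name!r}")
    table = f"{log_table_name}_CL"  # Sentinel appends _CL automatically.
    taken = {name.lower() for name in existing_tables}
    if table.lower() in taken:
        raise ValueError(f"Table {table} already exists; choose a new name.")
    return table


print(resulting_table_name("PushSecurity"))  # PushSecurity_CL
```

Enter the name in Push without the suffix. The `_CL` ending is added on the Sentinel side, as described above.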