This blog is intended as a resource for other extension developers looking to improve the security of their extension in the wake of the Cyberhaven attacks.

In line with what was targeted in this campaign, our focus here is on the extension deployment process. All browser vendors stand to benefit from greater security in this area. We hope that sharing what we’ve learned is useful, and we look forward to comments and feedback so we can collectively reduce the scope for attacks on browser extensions in the future.

TL;DR

In this blog, we’ll start with some background and walk through the “why” before discussing the key improvements that we feel are needed. But if you don’t care about the why or just want to cut to the chase, the key parts of defending against these attacks are:

Disable always-on access for all users to the browser extension store developer portals — you need to automate deployments through CI/CD to enable this.

Implement a multiparty approval process for extension deployments.

Secure your admin identities.

For details of how to do this practically, skip ahead to the “Recommended security architecture” section.

Background: The Cyberhaven incident

In December 2024, a campaign targeting browser extension developers was launched, and succeeded in compromising at least 35 Google Chrome extensions. Cyberhaven’s extension was the most notable of these, and the campaign has inherited their name.

The campaign targeted extension developers through the support email addresses listed on the extension stores, and notably used the consent phishing technique. While not new, this technique has rarely been seen, which is surprising given how powerful it is. A traditional credential or MFA phishing attack harvests credentials (or session tokens, to bypass MFA). With consent phishing, the attacker’s goal is instead to trick the victim into granting them an OAuth token that can perform actions on the victim’s behalf. Here, the scope requested by the attacker gave that token the ability to upload and publish new versions of the victim’s extension to the Chrome Web Store. The attackers used it to publish versions containing backdoor code that executed commands dynamically configured by the attacker. For more in-depth information, see the excellent analysis by the Secure Annex team.
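A consent phishing page doesn’t need a fake login form at all: it sends the victim to a genuine OAuth authorization URL, and the danger lies in the scope that URL requests. As a minimal sketch using only Python’s standard library (the client ID and URL are hypothetical), this is how you might extract the requested scopes from such a URL and flag the Chrome Web Store scope named in the Appendix:

```python
from typing import Set
from urllib.parse import parse_qs, urlparse

# The Chrome Web Store scope named in the Appendix; add other sensitive scopes as needed.
SENSITIVE_SCOPES = {"https://www.googleapis.com/auth/chromewebstore"}


def requested_scopes(auth_url: str) -> Set[str]:
    """Return the set of OAuth scopes requested by an authorization URL."""
    query = parse_qs(urlparse(auth_url).query)
    scopes: Set[str] = set()
    for value in query.get("scope", []):
        # OAuth 2.0 scopes are space-delimited within a single parameter.
        scopes.update(value.split())
    return scopes


def grants_store_access(auth_url: str) -> bool:
    """True if consenting would let the OAuth client act on the Chrome Web Store."""
    return bool(requested_scopes(auth_url) & SENSITIVE_SCOPES)


# Hypothetical consent URL of the kind a phishing email might lead to.
example = (
    "https://accounts.google.com/o/oauth2/v2/auth"
    "?client_id=attacker-app.apps.googleusercontent.com"
    "&response_type=code"
    "&scope=openid%20https%3A%2F%2Fwww.googleapis.com%2Fauth%2Fchromewebstore"
)
print(grants_store_access(example))  # True
```

Nothing on the consent screen itself is fake, which is why this technique slips past defenses built to spot look-alike login pages.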

Because of the dynamic nature of the commands sent to backdoored extensions, it’s difficult to be sure what the impact was — but whatever the case was in this specific incident, it’s perhaps more useful to understand what the impact to users might be so we can work to mitigate future attacks.

The simple fact is that for most common extensions that operate across multiple sites (like ad-blockers), using fairly typical permissions, a backdoor would likely be able to reach credentials and session tokens. An attacker could therefore use a backdoored extension to gain access to a user’s accounts on various websites. The potential impact on users is very high, and preventing it is something all extension developers should be focused on.

How do we stop the next iteration of this attack?

Given the value of the data, the relative ease with which this attack was performed (vs. for example something like a browser 0-day), and the success of the attack, it seems very likely this type of attack will happen again. As we saw in 2024, the success of the attacks on Snowflake customers gave rise to a huge increase in infostealer attacks. Attackers are quick to identify areas of potential opportunity and capitalize on them.

As an extension user, you should mainly be worried about one of two scenarios:

The developer of the extension adds malicious code to it and publishes the update to the extension store; your browser automatically updates, and the malicious code runs in your browser.

The developer of your extension is attacked, and the attacker gains access to publish updated versions of the extension to the extension store. The attacker pushes an update that includes their backdoor, your browser automatically updates, and the malicious code runs in your browser.

However, since we’re writing this for honest extension developers, and these attacks targeted the second scenario, that’s what we’ll be focussing on.

The challenge then is to make sure that only legitimate developers can push updates to the extension store. Easy to say, harder to do in the real world.

Primer on extension stores and the publication process

As a light intro for folks that aren’t extension developers but are still interested, here’s a very brief description of this process. It’s not critical to understand the inner workings and differences between the stores to follow this blog, but it is very interesting (in my opinion).

At Push we publish to three main extension stores: the Chrome Web Store (which lets us cover all the Chromium-based browsers, including Edge and Arc), Firefox Add-ons, and the Apple App Store, so these are the stores we’re covering here.

The generic process is the same for all stores. To publish an update, you first build (or package, really) your extension source, upload it to your tenant/team/org in the store, and publish it. The publishing step triggers a manual review process in the Chrome and Apple stores, and once complete, the new version appears on the extension stores. In Firefox it goes straight out immediately.

A note on the reviews: if you aren’t adding new permissions, then in our experience the manual review process is typically fairly cursory. We haven’t seen attackers add permissions, because doing so triggers a new interactive approval for the end user when the extension is updated, which is something an attacker wants to avoid in order to evade detection. This is likely why the checks implemented at the store level failed to discover the malicious updates in these cases.
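Because a new permission is one of the few changes that reliably gets noticed, it can be worth diffing permissions on every release in your own pipeline. A minimal sketch, assuming the manifest.json of the previous and candidate builds are both available to CI/CD:

```python
import json
from typing import Set

# Manifest keys that declare permissions requested at install/update time.
# MV2 manifests list host patterns under "permissions"; MV3 uses "host_permissions".
INSTALL_TIME_KEYS = ("permissions", "host_permissions")


def declared_permissions(manifest: dict) -> Set[str]:
    """Collect API permissions and host patterns declared in a manifest."""
    perms: Set[str] = set()
    for key in INSTALL_TIME_KEYS:
        perms.update(manifest.get(key, []))
    return perms


def added_permissions(old_manifest: str, new_manifest: str) -> Set[str]:
    """Permissions present in the new manifest.json but not in the old one."""
    old = declared_permissions(json.loads(old_manifest))
    new = declared_permissions(json.loads(new_manifest))
    return new - old
```

This deliberately over-reports (Chrome only re-prompts users for permissions that carry a warning). Keep the limitation above in mind too: an empty diff does not mean an update is benign, since attackers avoid adding permissions.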

While it’s possible to do this process completely manually, developers often automate builds and include some of the deployment steps above in the build automation process — I’ll use the term CI/CD to refer to this build and deployment process in the rest of this piece. All three stores provide API keys (albeit in different ways) to enable this process.

I’ll leave it there for now; see the “Extension store differences” section in the Appendix for more detail.

So what's the problem with the stores?

Ok, so far it sounds like the stores are all pretty standardised, so what's the actual problem here? Why did these attacks succeed?

There are a few notable control gaps in the extension stores that made this attack possible; had the missing controls been in place, they could have mitigated it.

Despite the massive risk associated with publishing a malicious extension, none of the mainstream stores provides a mechanism to implement a multiparty approval process, which would increase the number of successful phishing attempts an attacker needs.

Due to the lack of granular permissions in the Chrome store, any dev with access to the store could be phished. A slightly more granular permission model — for example the ability to have one developer with the permission to upload an extension (but not publish it), and another with the ability to publish an uploaded extension (but not upload a new package) — could have addressed this.

No log stream that could be easily ingested by a SIEM tool is provided, making it much harder to detect and respond.
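To make the second gap concrete, here is a hypothetical model of the split upload/publish permissions suggested above. It is not any store’s real API or permission system; it only shows the separation-of-duties rule: one role can upload but not publish, another can publish but not upload, and nobody can publish a package they uploaded themselves.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Optional, Set


class Role(Enum):
    UPLOADER = "uploader"    # may upload a new package, but not publish it
    PUBLISHER = "publisher"  # may publish an uploaded package, but not upload


@dataclass
class Package:
    version: str
    uploaded_by: str
    published_by: Optional[str] = None


@dataclass
class HypotheticalStore:
    roles: Dict[str, Set[Role]]
    packages: Dict[str, Package] = field(default_factory=dict)

    def _require(self, user: str, role: Role) -> None:
        if role not in self.roles.get(user, set()):
            raise PermissionError(f"{user} lacks the {role.value} role")

    def upload(self, user: str, version: str) -> None:
        self._require(user, Role.UPLOADER)
        self.packages[version] = Package(version, uploaded_by=user)

    def publish(self, user: str, version: str) -> None:
        self._require(user, Role.PUBLISHER)
        package = self.packages[version]
        # Separation of duties: even a user holding both roles can't ship alone.
        if package.uploaded_by == user:
            raise PermissionError("the uploader cannot publish their own package")
        package.published_by = user
```

Under this model, a single consent-phished token could upload a backdoored package but never publish it.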

But alas, we’re not here to complain about the stores — that’s a different blog post — we’re here to solve problems today!

I mentioned before that a multiparty approval process is key. But to understand why, it’s useful to think about this in terms of how this system will be attacked. Threat or attack models are typical approaches to doing this.

Attack model for publishing a malicious extension

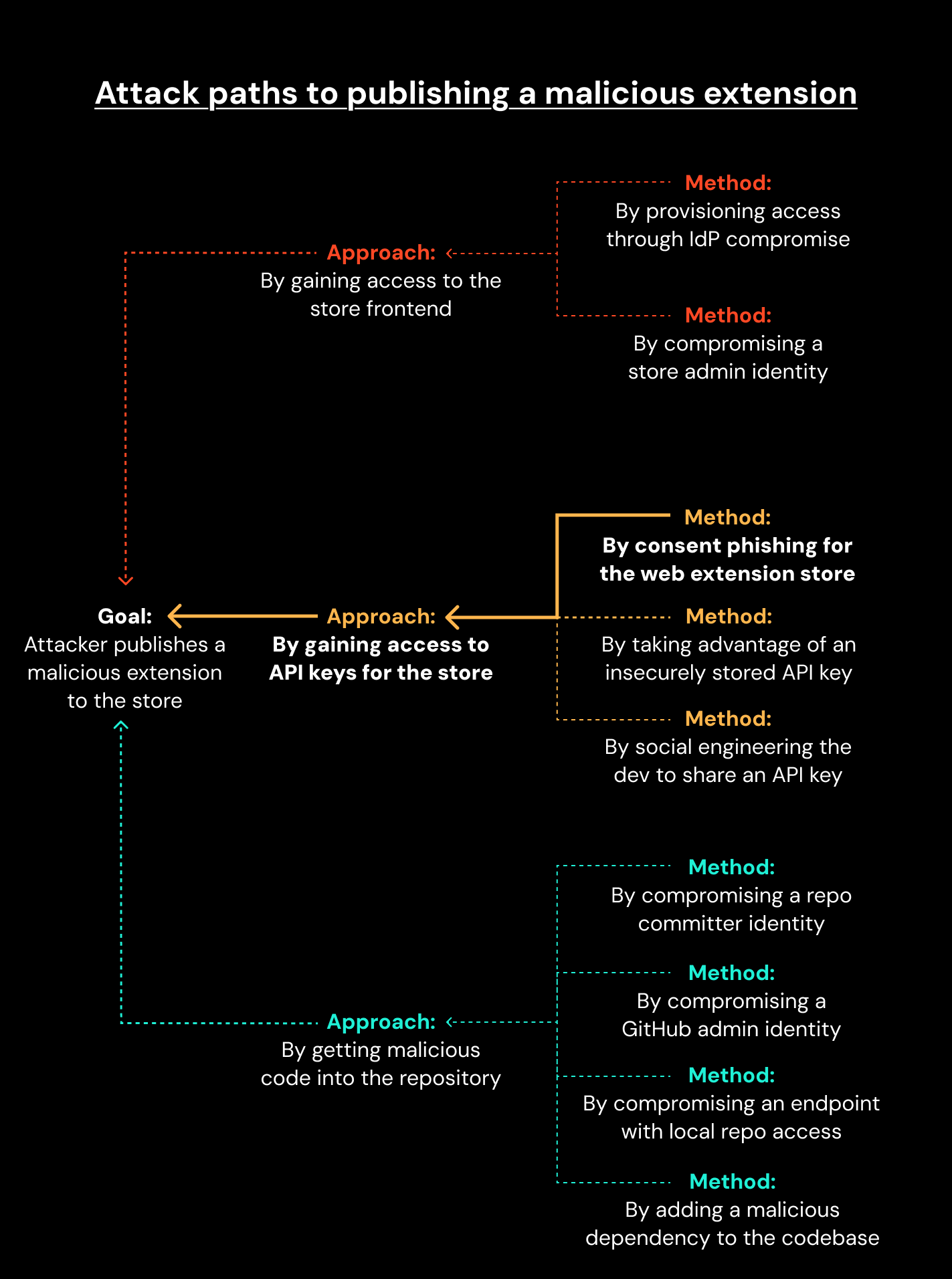

The main attack paths enabling an attacker to publish a malicious extension are shown in the attack model above.

You don’t need to follow all the minutiae of these attack paths, but note that they all target single points of failure (a single identity, a single endpoint), primarily through social engineering attacks:

A single user with access to the store needs to fall for a social engineering attack for this to work (as happened in this case).

Many paths can be completed with an identity or endpoint attack, and in most cases a single identity or endpoint is sufficient.

Attacks against code repos and CI/CD flows are parallel paths; you already need to trust those systems.

So in designing a security architecture, we want to do as much as possible to reduce single points of failure, and to make social engineering ineffective (even when it succeeds).
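One way to make “single points of failure” concrete is to treat each attack path as the set of footholds an attacker must hold at once, and look for the path with the smallest set. This is a toy sketch with hypothetical path and account names, not a threat-modeling tool. Note how, once the developer path needs two compromises, the weakest path shifts to the admin identities, which is where this post turns later.

```python
from typing import Dict, Set, Tuple


def weakest_path(paths: Dict[str, Set[str]]) -> Tuple[str, int]:
    """Return the attack path needing the fewest separate compromises.

    Each path maps to the set of footholds (identities or endpoints) that
    must ALL be compromised for that path to succeed.
    """
    name = min(paths, key=lambda p: len(paths[p]))
    return name, len(paths[name])


# Hypothetical paths before hardening: every path has a single point of failure.
before = {
    "consent-phish a store developer": {"dev_a_google"},
    "steal store API key from a laptop": {"dev_a_endpoint"},
    "push backdoor via the repo": {"dev_a_github"},
}

# Hypothetical paths after removing store access and requiring a second reviewer.
after = {
    "push backdoor via the repo": {"dev_a_github", "dev_b_github"},
    "take over an IdP admin": {"idp_admin"},
}

print(weakest_path(before))  # ('consent-phish a store developer', 1)
print(weakest_path(after))   # ('take over an IdP admin', 1)
```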

Recommended security architecture

You could literally write a book on everything it takes to secure identities, endpoints, and code repositories in general, and we’ll certainly mention some of the identity controls we think are effective later on. One thing to note here is that whatever you implement, the attack that succeeds in the real world today is vastly more likely to involve an element of social engineering than, for example, a vulnerability exploit. This is not just my opinion (solid as I like to think that is); it’s also well supported by threat reports like the Verizon DBIR, whose 2024 edition found that 68% of breaches involved ‘the human element’.

In tackling attacks that involve social engineering, there are two main workable options:

Remove the user’s ability to give the attacker what they need.

Assume that at least some users will fall for the attack, and make it as hard as possible for the attacker.

You may note I didn’t include security or awareness training in the above — essentially because I’ve never seen it be effective enough to be relied on, which is not to say it’s not very useful (especially if it’s well targeted and relevant — like unpacking what happened to Cyberhaven with your whole extension developer team would be!), just that technical controls are generally more reliable.

Anyway, back to what I think makes the cornerstones of a solution.

Remove business-as-usual (BAU) access to extension stores

If developers don’t have access to extension stores, they cannot be manipulated into giving attackers access to API keys, they cannot grant attackers authorization to access the store on their behalf, and if the identities are compromised they cannot be used to access the store.

The key to achieving this is to lean fully into completely automated CI/CD processes for normal extension updates. This means that after you’ve configured the CI/CD flows, no developer needs access to the extension stores to do their normal work (publishing new versions of the extension).

Unfortunately, you will still need to access the web console manually for some tasks, like updating branding, updating extension descriptions, and providing justification for new permissions (Chrome and Apple only). For our team, these tasks are infrequent enough that they can be handled using break-glass accounts.

A side note here: it might seem that you are just moving the risk around, from the extension store to the code repo & CI/CD system, but you are really already dependent on the security of these systems, so this is just removing the direct access to the extension store from the attack surface. You also have far greater flexibility and control in the CI/CD system as we’ll see in the “Implement multiparty approval in CI/CD” section below.

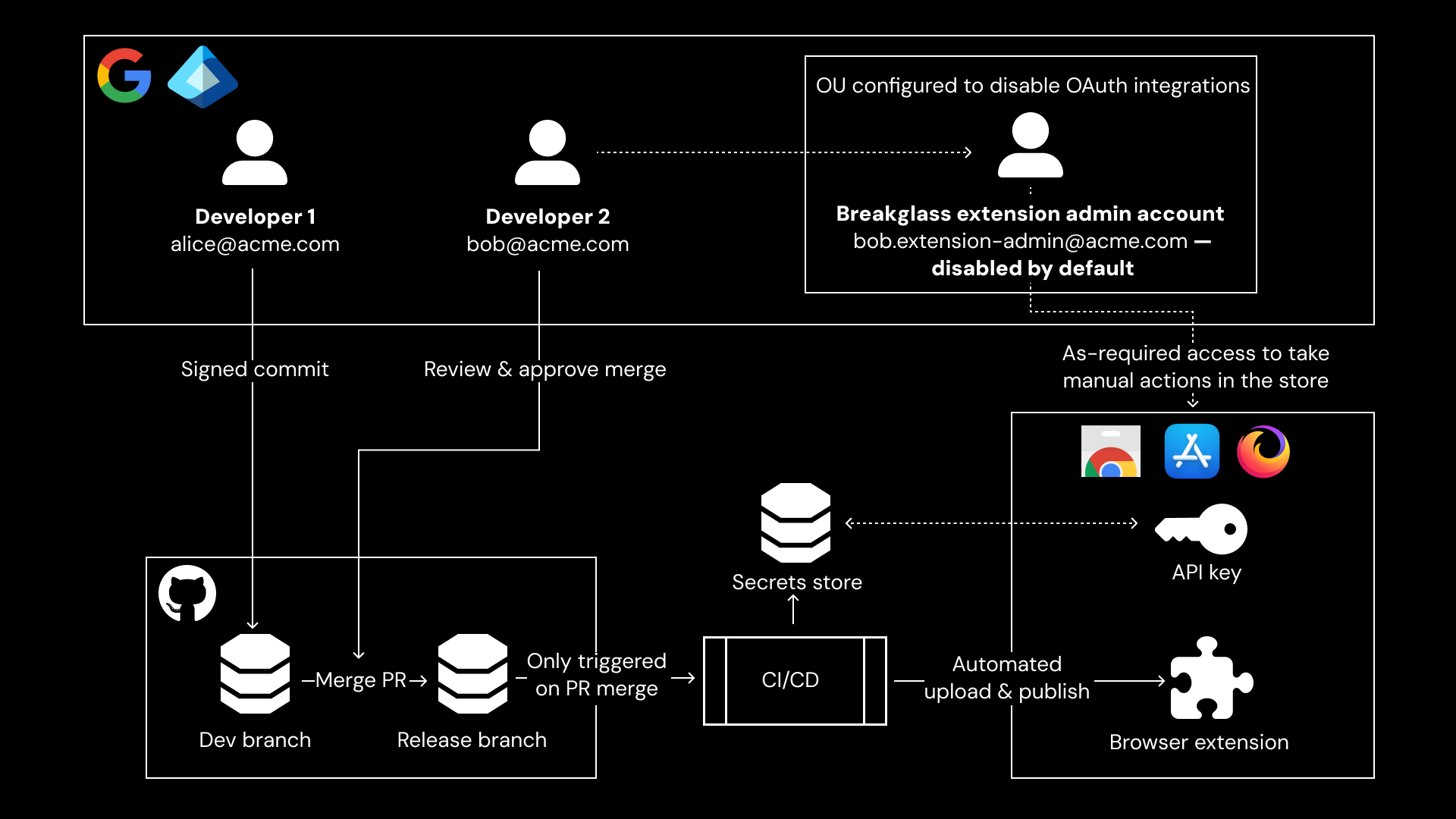

Break-glass store admin accounts

In practice you might implement this by issuing developers that need access to the extension stores a second SSO identity that is dedicated to this. You could have a john@acme.com Google account to do normal development work, and a john.admin@acme.com Google account to access the extension stores. You could also:

Make the .admin accounts disabled by default in Google, and enable one of them at a time as and when needed (this should be very rare).

Put the .admin accounts in a separate organizational unit (OU) in Google Workspace, and configure that OU so that those accounts are not allowed to authorize any OAuth integrations.

Ensure that all the .admin accounts use hardware-backed passkeys that don’t sync anywhere (we like YubiKeys), and disable password logins.

For bonus points, make sure .admin accounts can only be used on a separate dedicated endpoint (e.g. a locked-down Chromebook).
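Rules like these tend to drift over time, so it can help to check them continuously. A minimal sketch, assuming you can export account state from your IdP into the hypothetical Account record below (the field names and OU path are illustrative, not a Google Workspace API), and using the .admin naming convention from the example above:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Account:
    """Hypothetical snapshot of an account, e.g. exported from your IdP."""
    email: str
    suspended: bool
    org_unit: str
    oauth_apps_allowed: bool
    password_login_enabled: bool
    passkey_only: bool


BREAK_GLASS_OU = "/Break-glass"  # hypothetical OU for .admin accounts


def break_glass_findings(account: Account) -> List[str]:
    """List deviations from the break-glass baseline described above."""
    if ".admin@" not in account.email:
        return []  # not a break-glass account under this naming convention
    findings = []
    if not account.suspended:
        findings.append("account is enabled (confirm an approved task is in progress)")
    if account.org_unit != BREAK_GLASS_OU:
        findings.append(f"account is in {account.org_unit}, not {BREAK_GLASS_OU}")
    if account.oauth_apps_allowed:
        findings.append("OAuth app authorization is allowed")
    if account.password_login_enabled or not account.passkey_only:
        findings.append("non-passkey sign-in is possible")
    return findings
```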

In this way you can have a setup where an attacker would have to successfully target a developer using a hardware-backed identity during the few minutes a year their account is active, and do so without using consent phishing (because all OAuth integrations are disabled for your break-glass accounts). This is a very tall order for the attacker.

Implement multiparty approval in CI/CD

If nobody has active BAU access to extension stores for more than very brief periods, the attacker’s next best option is to target the process that developers are using to publish, i.e. committing code to the repository and waiting for the CI/CD system to publish the extension automatically.

In practice this means the attacker would need to attack the identity (account) the employee uses to access the code repository (assuming a typical cloud-hosted system like GitHub here), or sneak code in through an endpoint attack. Overwhelmingly, these attacks are likely to include an element of social engineering, whether that’s phishing credentials or session tokens, or tricking the user into downloading malware, perhaps through a malicious dependency or VS Code extension.

We can make the attacker’s life exponentially harder by requiring that they successfully attack two developers, at the same time, before anyone notices. Quick intuition might make it seem like we’re only doubling the difficulty, but other red-teamers with experience doing this will agree that it’s often very easy to target a random user in a large population quickly (one employee in a large corporate), but a single user in a much smaller team (say an extension dev team) might take repeated attacks. When you need to target multiple users in a small team, in a single attack, and maintain the breach concurrently while taking actions (e.g. committing malicious code hoping no-one notices) it becomes much more likely that the alarm will be raised.
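The intuition can be put in rough numbers. Purely as an assumption, suppose each targeted developer falls for an attack independently with probability p. Needing n of them at once then succeeds with probability p^n, which at n = 2 squares the attacker’s odds rather than halving them. The sketch uses a hypothetical p. It ignores the extra burden described above (holding both footholds concurrently without being noticed), which would push the real figure lower, while correlated susceptibility (the same lure working on the whole team) would push it higher.

```python
def p_all_compromised(p_single: float, n_required: int) -> float:
    """Chance an attacker compromises all n required developers in one campaign.

    Independence between developers is an assumption: a lure that works on one
    teammate may also work on another, which would make the real number higher.
    """
    return p_single ** n_required


# Hypothetical 20% success rate against any one targeted developer.
for n in (1, 2, 3):
    print(n, round(p_all_compromised(0.2, n), 4))
# 1 0.2
# 2 0.04
# 3 0.008
```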

How to implement multiparty approval through CI/CD

There are probably dozens of ways to skin this cat, but I’ll share one way of doing this that works with mainstream tools and developer processes — using protected git branches.

Step 1: Set up multiple branches (these might be dev/stg/prd, or development/prerelease/release), and configure CI/CD to automatically build and deploy to the stores when a PR is merged into the prd/release branch.

Step 2: Use branch protection rules that require a second (or even third) developer, either named individually or drawn from a designated group, to review and approve the PR merge. This achieves multiparty approval.

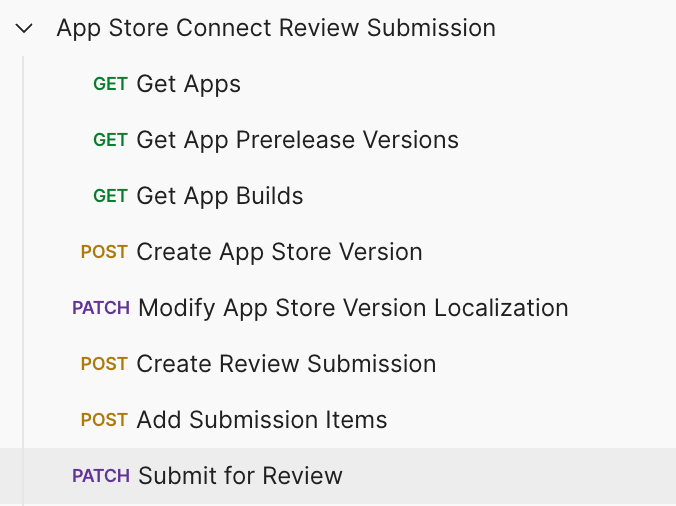

Step 3: Configure fully automated builds and deployments as part of your CI/CD flows. While this is possible for all three stores, some of the stores make you jump through a few hoops; automating a publish to the Apple App Store, for example, takes quite a few steps.

Since we’ve done the work of figuring this out once already, we extracted the critical steps into a companion GitHub repo to make this a bit easier to implement.
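Two rules are doing the work in the steps above, and it can help to state them precisely. The sketch below is hypothetical. merge_allowed spells out the approval rule your code host’s branch protection should enforce (you wouldn’t normally reimplement it). should_publish is a small guard the publish job itself could run, so that a misconfigured workflow trigger fails closed. It assumes GitHub Actions, which sets the GITHUB_EVENT_NAME and GITHUB_REF environment variables, and a branch named release; adjust both for your setup.

```python
import os
from typing import Mapping, Set

# Assumed name of the protected branch whose merges trigger store deployments.
RELEASE_REF = "refs/heads/release"


def merge_allowed(author: str, approvers: Set[str], reviewer_group: Set[str], required: int = 1) -> bool:
    """The rule branch protection should enforce on the release branch.

    Only approvals from the designated reviewer group count, and the PR
    author's own approval never does. required=1 means a second person must
    approve; required=2 means a third.
    """
    independent = (approvers & reviewer_group) - {author}
    return len(independent) >= required


def should_publish(env: Mapping[str, str]) -> bool:
    """Publish only for a push to the protected release branch.

    If branch protection blocks direct pushes (for admins too), a push to
    this branch can only come from merging an approved PR.
    """
    return env.get("GITHUB_EVENT_NAME") == "push" and env.get("GITHUB_REF") == RELEASE_REF


def main() -> None:
    # Run at the start of the publish job, before any store credentials are used.
    if not should_publish(os.environ):
        raise SystemExit("Refusing to publish: not a push to the release branch.")
```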

As we’ve described it so far, this is a fairly basic implementation, and there are several other controls you might consider to harden this process, including:

Make sure you use a secrets protection system to store the extension store API keys in your CI/CD (it’s no use if the attacker can read the API keys from a config file in your code).

Ensure that developers don’t have access to change branch protection rules, or access CI/CD secrets (otherwise one compromised developer account can undo all this good work — let DevOps or other admin users that are not extension developers handle this admin).

Enforce hardware-backed signed commits as a condition for PR merges (this makes it very difficult to get bad code into the repo without also compromising your dev team’s YubiKeys).
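On GitHub, most of these controls can be applied through the REST API’s “Update branch protection” endpoint, ideally by an admin who is not an extension developer, as suggested above. A minimal sketch of the request body, based on our understanding of that endpoint; check field names against GitHub’s current documentation (GitHub’s newer repository rulesets are an alternative). Required commit signatures are configured through a separate endpoint (…/protection/required_signatures). GitHub verifies that commits are signed, but whether the signing key lives on hardware is down to your own key-issuance policy.

```python
import json


def branch_protection_payload(required_approvals: int = 2) -> dict:
    """Request body for GitHub's "Update branch protection" REST endpoint:
    PUT /repos/{owner}/{repo}/branches/{branch}/protection
    """
    return {
        "required_status_checks": None,  # add your CI checks here if you use them
        "enforce_admins": True,  # repository admins can't bypass the rules either
        "required_pull_request_reviews": {
            "required_approving_review_count": required_approvals,
            "dismiss_stale_reviews": True,  # new commits invalidate earlier approvals
            "require_code_owner_reviews": True,  # needs a CODEOWNERS file
            "require_last_push_approval": True,  # the last pusher can't approve their own push
        },
        "restrictions": None,  # or limit who can push (organization repos only)
    }


print(json.dumps(branch_protection_payload(), indent=2))
```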

Now you have strong, hardware-backed, multiparty-authenticated deployments to the stores.

The next best attack path — IdP admin compromise

Once developers don’t have direct access to the stores, and you have multiparty approvals to get code into CI/CD, the next best attack paths are to target other single-points-of-failure — most likely the administrators.

This might be the IdP (Google Workspace, Entra, Okta, etc.) admins, whose accounts can be used to provision access to the stores, or simply to recover one or more of the developer or break-glass accounts. Or it might be the code repo or CI/CD (GitHub in our example) admins, who have access to API keys and can change branch protection rules.

Managing privileged identities like these admin accounts is a constant challenge, but continuing what is perhaps the central thread of this blog, identity attacks (likely through social engineering) are going to be the first port of call for an attacker.

Recommendations for hardening admin identities

If there’s one thing we know here at Push, it’s identity security — but I’ll fight the urge to go into too much depth with generic recommendations, and focus on where there are opportunities specific to this scope.

One of the most critical aspects of securing these admin accounts is making sure that they are phishing resistant. Where possible, you should be using phishing-resistant MFA methods. Typically this means some kind of domain-bound security key using the WebAuthn protocol: a passkey using your fingerprint reader is good, and something like a YubiKey is great. I think this is pretty well understood, but where it goes wrong most often is when backup methods and alternative login methods exist. For example, you might be using a Google OIDC login secured with a YubiKey to access the Firefox store, but not realize that this account also has a password set that doesn’t have MFA, or has phishable MFA like SMS or app codes enabled.

Attackers are increasingly using attacks that downgrade MFA methods (so the attacker will request the least secure active MFA method when phishing you, rather than the strong method you might use day-to-day), and this is completely automated in modern MFA-bypass phishing kits.
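When auditing admin accounts, the useful question is therefore not “does this account have a security key?” but “what is the weakest way to sign in to it?”. A minimal sketch, with an illustrative (and debatable) ranking of methods and a hypothetical set of enabled methods per account:

```python
from enum import IntEnum
from typing import Set


class Method(IntEnum):
    """Sign-in methods, roughly ordered from weakest to strongest (illustrative)."""
    PASSWORD_ONLY = 0
    SMS = 1
    TOTP = 2      # authenticator-app codes
    PUSH = 3      # push approval (still phishable)
    WEBAUTHN = 4  # domain-bound passkey or security key


def effective_method(enabled: Set[Method]) -> Method:
    """An attacker can usually steer the victim to the weakest enabled method,
    so that method, not the strongest one, sets the account's real protection.
    With nothing recorded, assume the worst."""
    return min(enabled) if enabled else Method.PASSWORD_ONLY


def phishing_resistant(enabled: Set[Method]) -> bool:
    return effective_method(enabled) == Method.WEBAUTHN
```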

Warning, product plug coming 🙂 — what we do at Push is help you identify issues like these at scale, across all admin, break-glass, dev, and normal user accounts. We also block credential phishing by detecting when users try to enter their SSO credentials on the wrong page, detecting session theft, and can even monitor when credentials stolen via infostealers show up on underground forums.

Going even further to harden extension deployment

This blog is already getting way too long, but there are a lot of other controls that can really help harden extension deployment — if there is interest I might go into detail in a future blog post, but for now let me just mention some of them.

Multiparty approvals for Google

If you’re going to do multiparty approvals for extension deployments, then enabling this for admin actions that protect that infrastructure seems like a no-brainer.

Google allows you to enable multiparty approval for sensitive actions in Google Workspace. We wish it was a bit more granular, and covered more configurable actions — but it’s an awesome start, nice work Google!

Admin workstations

When we used to do red-team exercises, one of the most challenging controls to work around was when the admin accounts we were targeting were only used on dedicated admin workstations. Ideally those workstations would do nothing except admin tasks, and the accounts would be locked down, so in this case that might mean:

No email access

No extensions

No OAuth apps

This becomes incredibly challenging to attack — but it does come with some obvious painful UX impact for admins, so I don’t think this is a no-brainer for everyone.

Isolate support emails

Sending your support emails to extension developers creates a direct path to start social engineering — something attackers used to great effect in this campaign. If your developers are not also your frontline support team, consider ringfencing developers from that public support email group so attackers have to at least do some reconnaissance work to identify the developers to target.

Detection and response

As always there are a myriad of things that can be monitored. We think high value would be doing things like:

Checking whether each new version of your extension that appears in the store was directly caused by the CI/CD process, and:

Alert if there is no direct link here.

You can configure email alerts to trigger this automated check.

You could consider immediate automated roll-back to a previous version of the extension if it wasn’t published via the CI/CD system.

Any activity on break-glass accounts — these accounts should only be used after they are activated by admins to complete a specific task, so this is an obvious alert to configure.

Unusual activity on service accounts — this is a bit of work to profile, but very valuable.
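The first two checks reduce to simple comparisons once you have the data. A minimal sketch, assuming you can already collect (a) the versions live in each store, for example via the store notification emails mentioned above, (b) the versions your CI/CD system published, and (c) break-glass login events plus approved activation windows from your IdP. All record shapes here are hypothetical, and automated rollback is left out.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Iterable, List, Set, Tuple


@dataclass
class StoreRelease:
    version: str  # version observed live in the store
    observed_at: datetime


def unexplained_releases(releases: Iterable[StoreRelease], ci_versions: Set[str]) -> List[StoreRelease]:
    """Store releases with no matching publish record from the CI/CD system."""
    return [r for r in releases if r.version not in ci_versions]


def break_glass_alerts(
    logins: Iterable[Tuple[str, datetime]],
    windows: Dict[str, Tuple[datetime, datetime]],
) -> List[str]:
    """Flag break-glass logins that fall outside an approved activation window."""
    alerts = []
    for account, ts in logins:
        window = windows.get(account)
        if window is None or not (window[0] <= ts <= window[1]):
            alerts.append(f"{account} used outside an approved window at {ts.isoformat()}")
    return alerts
```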

Our request to extension stores

I’ll use this opportunity to make an open request to the browser extension stores for a couple of features that I think would really benefit the entire ecosystem:

Add the ability to configure an explicit multiparty approval process (and show the public which extensions have enabled these controls!).

More granular permissions or roles (e.g. only edit descriptions, only upload, only publish, only accept new terms).

Better logs and monitoring – making it easier to ingest events related to your extension via the store into a SIEM would make alerts much easier to configure.

Enforce stronger default identity security controls (even if only for risky or popular extensions). GitHub now requires 2FA for everyone who contributes code on the platform; it’s about time that we required MFA to access an extension store as well.

Conclusion

We’ve seen in the past that the successful use of new techniques seems to inspire other attackers and lead to many similar attacks, so the smart money is on this happening again.

There is a lot of work needed to secure this process, and hopefully this blog has provided a starting point. We’d love to hear from you, so let’s start sharing some ideas around hardening this process even more!

If you're a customer rather than an extension developer, this guide hopefully gives you a sense of the supply chain attacks that are likely to happen in the future. Asking your vendors which steps they’ve taken to prevent these attacks might be a sensible addition to your vendor risk assessment process (when the product includes a browser extension).

This kind of due diligence is viable where the developer is a vendor you have a commercial relationship with, but is a non-starter when it’s an extension that’s offered for free by well-meaning open source developers. In these cases a sensible response might be to require approvals for new browser extensions, a technical risk review based on (at least) the permissions the extension is asking for, and managed browser policies to control and further limit what some or all extensions can do. For example, you may decide to block access for extensions to your IdP’s domains to protect your SSO accounts.
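For Chrome, both the approval requirement and the IdP-domain block can be expressed through the ExtensionSettings enterprise policy. A minimal sketch that generates a policy value, assuming a default-deny allowlist; the extension ID and IdP hosts are placeholders, so check key names and host-pattern syntax against Chrome’s current policy documentation before deploying. Blocking extensions on IdP domains may also stop legitimate extensions (such as password managers) from working there, so test before rolling out.

```python
import json
from typing import Iterable


def extension_settings_policy(approved_ids: Iterable[str], idp_hosts: Iterable[str]) -> str:
    """Build a value for Chrome's ExtensionSettings enterprise policy.

    "*" applies to every extension not listed by ID: installs are blocked by
    default, and extensions may not interact with the listed IdP hosts.
    Each approved extension ID is explicitly allowed to install.
    """
    blocked_hosts = [f"*://{host}" for host in idp_hosts]
    policy = {"*": {"installation_mode": "blocked", "runtime_blocked_hosts": blocked_hosts}}
    for ext_id in approved_ids:
        # Repeat the host block per extension rather than relying on inheritance from "*".
        policy[ext_id] = {"installation_mode": "allowed", "runtime_blocked_hosts": blocked_hosts}
    return json.dumps(policy, indent=2)


# Hypothetical extension ID and example IdP hosts.
print(extension_settings_policy(["a" * 32], ["*.okta.com", "login.microsoftonline.com"]))
```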

We’ll be releasing guidance on how to manage third party extensions used in your organization in the near future — subscribe to our mailing list to be notified when we do.

Appendix: Extension store differences

We covered the general process of publishing extensions to the different stores in the “Primer on extension stores and the publication process” section above, now let’s talk about the differences between the stores. Let’s start with how they provision for automated deployments.

Automation keys

The Chrome Web Store allows automation through an OAuth app. As described in their documentation, the process is for a developer to create a custom OAuth app (a client, in OAuth speak); a user with access to the store then authorizes the OAuth app to access the Chrome Web Store on their behalf using the https://www.googleapis.com/auth/chromewebstore scope.

If this sounds familiar, that’s because this is exactly what attackers tricked developers into doing with their own OAuth app in the Cyberhaven campaign. In the normal flow, the developer then uses the OAuth client credentials and the refresh token obtained through that authorization in their CI/CD flow to automate the deployment process.
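For reference, the automated Chrome flow looks roughly like the sketch below: the CI/CD job exchanges the stored refresh token for a short-lived access token, uploads the package, then publishes it. It is based on the v1.1 Chrome Web Store Publish API as we understand it; endpoint paths, headers, and any newer API versions should be checked against Google’s current documentation. The functions only build requests (send them with urllib.request.urlopen), and all three credentials should come from your CI/CD secret store, never the repo. The refresh token is exactly what a consent phishing attack obtains, so it deserves the same protection as a password.

```python
import urllib.parse
import urllib.request

TOKEN_URL = "https://oauth2.googleapis.com/token"
API_BASE = "https://www.googleapis.com"


def token_request(client_id: str, client_secret: str, refresh_token: str) -> urllib.request.Request:
    """Exchange the long-lived refresh token for a short-lived access token."""
    body = urllib.parse.urlencode({
        "client_id": client_id,
        "client_secret": client_secret,
        "refresh_token": refresh_token,
        "grant_type": "refresh_token",
    }).encode()
    return urllib.request.Request(TOKEN_URL, data=body, method="POST")


def _auth_headers(access_token: str) -> dict:
    return {"Authorization": f"Bearer {access_token}", "x-goog-api-version": "2"}


def upload_request(item_id: str, access_token: str, zip_bytes: bytes) -> urllib.request.Request:
    """Upload a new package for an existing item (this does not publish it)."""
    url = f"{API_BASE}/upload/chromewebstore/v1.1/items/{item_id}"
    return urllib.request.Request(url, data=zip_bytes, headers=_auth_headers(access_token), method="PUT")


def publish_request(item_id: str, access_token: str) -> urllib.request.Request:
    """Submit the most recently uploaded package for review and publication."""
    url = f"{API_BASE}/chromewebstore/v1.1/items/{item_id}/publish"
    return urllib.request.Request(url, data=b"", headers=_auth_headers(access_token), method="POST")
```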

The situation is a bit simpler for Firefox and Apple, which both work by developers just creating simple static API keys, though Apple does allow you to create personal API keys linked to a single account (and that account’s permissions).

Accessing the store

In a business environment, using SSO to access apps is extremely useful as it simplifies the provisioning and security-ops work of maintaining secure identities — and often provides more secure authentication methods (e.g. hardware backed WebAuthn MFA) than the target app does (as is the case for the web stores). It also simplifies and centralizes the ability to log and monitor the use of these accounts. I can’t recommend the use of strong SSO authentication enough in cases like this where ensuring you have the right controls in place is paramount.

Fortunately all the stores provide SSO login methods. For the Chrome store, users log in (only) using Google SSO accounts, and if they are part of a Google Workspace, access can be provisioned through membership to a group. Firefox allows access using a username and password, but also offers OIDC SSO logins through Google or Apple accounts. If you make use of Managed Apple IDs, Apple offers OIDC SSO authentication as well.

For Chrome and Firefox there is no real concept of roles (or nothing really useful), and you should assume any user with access to a team in your account has the ability to publish extension updates. Apple offers more granular roles and permissions - and there are low privileged roles that can’t publish updates.